Building a Secure AI Future with Subrosa - A Practical Guide

If you are reading this, you may be implementing AI systems in your organization. Perhaps you are concerned about the security implications of generative AI (GAI), or you are looking for guidance on keeping your AI deployments both secure and compliant. Whatever your situation, implementing an AI risk management framework should be a priority for any organization working with AI technologies.

GAI presents significant security risks, including data leaks and compliance violations. Surveys report that 71% of employees use shadow AI, meaning AI tools adopted without IT approval or oversight [1], and that 77% of security leaders identify potential data leakage as a major concern [2]. Without proper controls, organizations risk breaches and reputational damage. Because GAI risks keep evolving, mitigation is challenging, which underscores the need for a structured AI risk management framework.

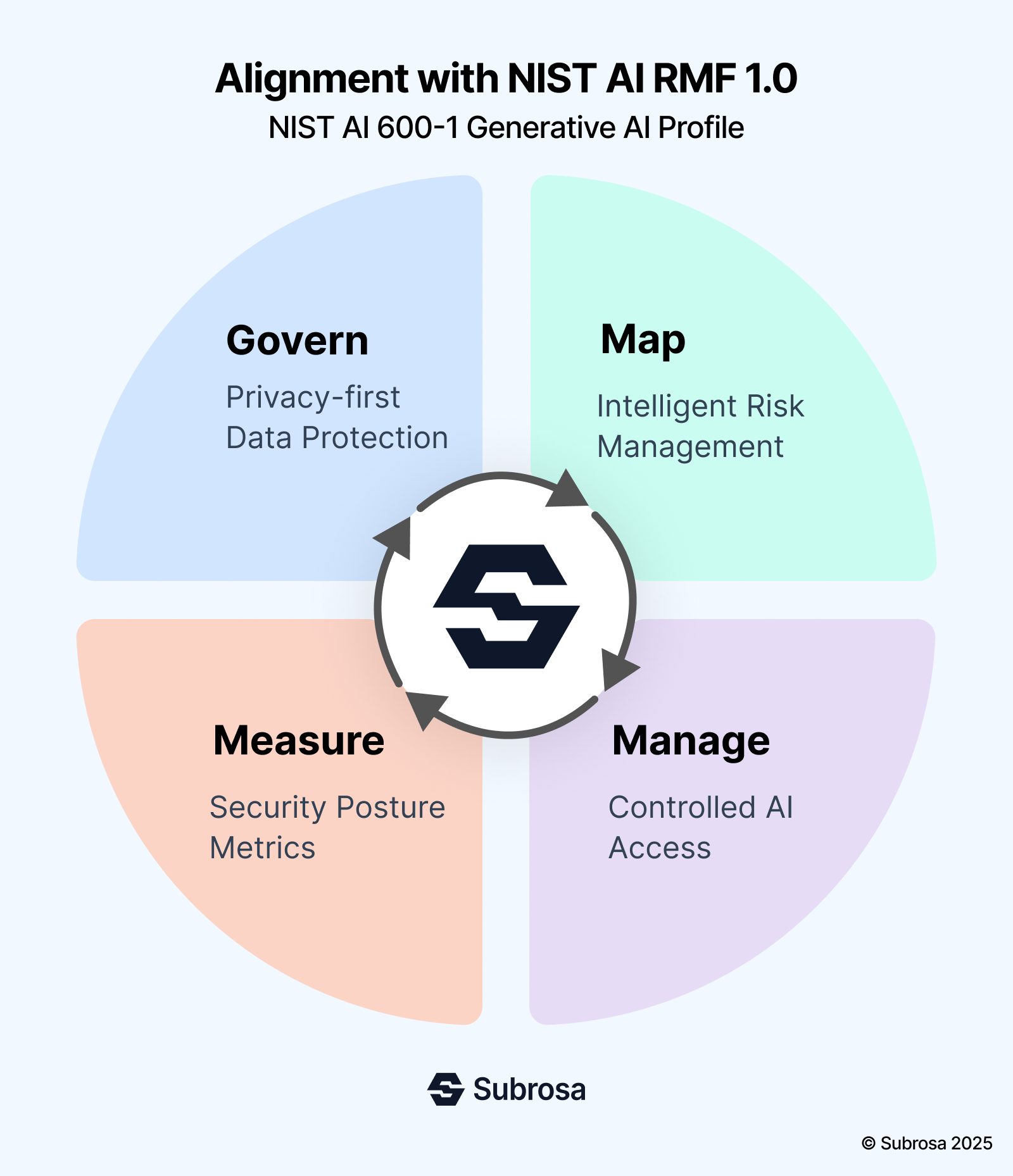

NIST AI Risk Management Framework and the Generative AI Profile (NIST AI 600-1)

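The NIST AI Risk Management Framework (AI RMF, NIST AI 100-1) offers structured guidance for incorporating trustworthiness considerations into AI systems. NIST AI 600-1, the Generative AI Profile, applies the AI RMF to generative AI and lists suggested actions, with identifiers such as GV-1.2-001, under each function. The AI RMF Core is organized into four functions: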

- Govern – Establishes policies, roles, and accountability for AI risk management.
- Map – Identifies AI system dependencies, contexts, and potential risks.
- Measure – Assesses AI system risks through evaluation metrics.
- Manage – Implements mitigation strategies and response measures.

Subrosa’s Alignment with the NIST AI Framework

Subrosa addresses GAI risks by securing sensitive data, enforcing governance policies, and providing continuous monitoring and access control. Its capabilities map to high-priority suggested actions in NIST AI 600-1, supporting compliance, transparency, and security across AI deployments.

| Function | Subrosa Alignment |
|---|---|
| Govern | Subrosa anonymizes sensitive data before AI processing, supporting compliance and privacy while preserving AI usability. Real-time monitoring automatically detects and secures sensitive data. |
| Map | Subrosa provides AI visibility by tracking and mapping interactions. It identifies which AI tools are accessed, what data is processed, and where information-sharing risks arise. |
| Measure | Subrosa monitors AI interactions in real time to detect unauthorized use and data exposure risks before they escalate. |
| Manage | Subrosa implements granular access controls, tracks interactions at the user and device levels, and supports rapid incident response through automated workflows. |
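The Govern row above describes anonymizing sensitive data before it reaches an AI model. The general idea can be illustrated with reversible pseudonymization: PII in a prompt is swapped for placeholder tokens before submission, and the tokens are mapped back in the model's reply. This is a minimal sketch, not Subrosa's implementation; the two regex patterns, the `<LABEL_n>` token format, and the function names `pseudonymize` and `restore` are hypothetical, and real PII detection needs far broader coverage than two regular expressions.

```python
import re

# Illustrative patterns only (assumption): production detection needs many more PII types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def pseudonymize(text: str) -> tuple[str, dict[str, str]]:
    """Replace matched PII with placeholder tokens; return redacted text and token map."""
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}

    for label, pattern in PII_PATTERNS.items():
        def _swap(match: re.Match, label: str = label) -> str:
            value = match.group(0)
            # Reuse the existing token if this exact value was already replaced
            for token, original in mapping.items():
                if original == value:
                    return token
            counters[label] = counters.get(label, 0) + 1
            token = f"<{label}_{counters[label]}>"
            mapping[token] = value
            return token

        text = pattern.sub(_swap, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into text that contains placeholder tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text
```

In this sketch only the redacted prompt would be sent to the model, and `restore` would be applied to the reply. Reusing one token per repeated value keeps the redacted prompt internally consistent for the model.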

Subrosa's security controls map to suggested actions under the Govern, Map, Measure, and Manage functions of the NIST AI RMF, as applied to generative AI by the NIST AI 600-1 profile. Working together, the controls support governance, monitoring, and protection of AI system use while addressing data protection, privacy, and compliance risks. The tables below map Subrosa's capabilities to specific framework actions, helping organizations select appropriate controls for secure and compliant AI adoption.

Governance

| Subrosa Feature | Framework Reference | Control | Summary | NIST CSF Functions |
|---|---|---|---|---|
| Data transparency and visibility ensures accountability in AI data usage and reduces IP risks | NIST AI 600-1, GV-1.2-001 | Establishes transparency policies for tracking data origins and history | Balances proprietary data protection with digital content integrity | Identify, Protect |
| Establishes clear acceptable use policies and monitoring | NIST AI 600-1, GV-1.4-002 | Mitigates misuse and illegal applications of GAI | Establishes transparent acceptable use policies for GAI that address illegal use or applications of GAI | Identify, Protect |
| Enhanced AI incident response strengthens mitigation of AI-related data leaks | NIST AI 600-1, GV-1.5-002 | Establishes policies for incident reviews and adaptive security measures | Establishes organizational policies and procedures for after-action reviews of GAI system incident response and incident disclosures to identify gaps, and updates incident response and incident disclosure processes as required | Respond, Recover |
| Prevents hidden risks from untracked AI models, including shadow AI | NIST AI 600-1, GV-1.6-002 | Establishes exemption criteria for AI components in external software | Defines any inventory exemptions in organizational policies for GAI systems embedded into application software | Identify, Protect |
| Reduces misuse of AI-driven interactions with outbound data policies | NIST AI 600-1, GV-3.2-003 | Defines criteria for strict policies on chatbot interactions and AI decision-making tasks | Defines acceptable use policies for GAI interfaces, modalities, and human-AI configurations (i.e., for chatbots and decision-making tasks), including criteria for the kinds of queries GAI applications should refuse to respond to | Identify, Protect |
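The GV-1.6-002 row concerns untracked AI use such as shadow AI. One simple way to surface it is to compare the hosts of observed outbound requests (for example, from proxy logs) against a catalog of known GAI services and an allowlist of sanctioned ones. This sketch assumes such a log is available as a list of URLs; the host names, both sets, and `find_shadow_ai` are hypothetical placeholders and do not describe how Subrosa performs discovery.

```python
from collections import Counter
from typing import Iterable
from urllib.parse import urlparse

# Hypothetical catalog of GAI service hosts (placeholder names, not real services).
KNOWN_GAI_HOSTS = {"genai.internal.example.com", "genai-a.example.net", "genai-b.example.org"}
# Hypothetical allowlist: GAI services approved under the acceptable use policy.
SANCTIONED_GAI_HOSTS = {"genai.internal.example.com"}


def find_shadow_ai(request_urls: Iterable[str]) -> Counter:
    """Count requests to known GAI hosts that are not on the sanctioned allowlist."""
    hits: Counter = Counter()
    for url in request_urls:
        # urlparse(...).hostname is lowercased and excludes any port number
        host = urlparse(url).hostname or ""
        if host in KNOWN_GAI_HOSTS and host not in SANCTIONED_GAI_HOSTS:
            hits[host] += 1
    return hits
```

The per-host counts could then feed an inventory review, where each unsanctioned service is either approved, blocked, or recorded as an exemption.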

Map

| Subrosa Feature | Framework Reference | Control | Summary | NIST CSF Functions |
|---|---|---|---|---|
| Test and evaluation of data flows prevents unauthorized data usage and privacy violations | NIST AI 600-1, MP-2.1-002 | Supports compliance with data privacy, intellectual property, and security regulations | Institutes test and evaluation for data and content flows within the GAI system, including, but not limited to, original data sources, data transformations, and decision-making criteria | Protect, Detect |
| Reduces risks from unreliable or tampered upstream data sources; validation of data submitters is partial and does not yet extend to the builder side | NIST AI 600-1, MP-2.2-001 | Provides traceability and data integrity for AI-generated content by validating who submitted the data | Identifies and documents how the system relies on upstream data sources, including for content provenance, and whether it serves as an upstream dependency for other systems | Identify, Detect |
| Prevents unintended data leaks and content manipulation risks | NIST AI 600-1, MP-2.2-002 | Ensures that external dependencies do not compromise information integrity | Observes and analyzes how the GAI system interacts with external networks, and identifies any potential for negative externalities, particularly where content provenance might be compromised | Protect, Detect |
| Provides human-GAI configuration oversight to identify issues in human-AI interactions | NIST AI 600-1, MP-3.4-005 | Implements systems to track and refine human-GAI outcomes | Establishes systems to continually monitor and track the outcomes of human-GAI configurations for future refinement and improvements | Detect, Respond |
| Prevents exposure of personally identifiable information (PII) and sensitive data through AI-generated content privacy monitoring | NIST AI 600-1, MP-4.1-001 | Ensures AI-generated content adheres to privacy standards and compliance requirements | Conducts periodic monitoring of AI-generated content for privacy risks and addresses any possible instances of PII or sensitive data exposure | Detect, Protect |
| Creates a unified governance framework that aligns AI security with enterprise governance | NIST AI 600-1, MP-4.1-003 | Provides GAI governance integration | Connects new GAI policies, procedures, and processes to existing model, data, software development, and IT governance and to legal, compliance, and risk management activities | Identify, Protect |
| Detects PII and sensitive data in AI output to prevent leakage of confidential data | NIST AI 600-1, MP-4.1-009 | Uses automated tools to scan AI-generated text, images, and multimedia for PII | Leverages approaches to detect the presence of PII or sensitive data in generated output text, image, video, or audio | Detect, Protect |
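MP-4.1-009 calls for detecting PII or sensitive data in generated output. For text output, a common approach pairs pattern matching with a validation step that cuts false positives. The sketch below flags email addresses and payment card numbers, keeping only card candidates that pass the Luhn checksum. The patterns, the `Finding` type, and `scan_output` are hypothetical illustrations rather than Subrosa's detector, and image, video, or audio output would need different techniques.

```python
import re
from dataclasses import dataclass

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


@dataclass
class Finding:
    label: str
    start: int
    end: int


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_output(text: str) -> list[Finding]:
    """Flag emails and Luhn-valid card numbers in model output, ordered by position."""
    findings = [Finding("EMAIL", m.start(), m.end()) for m in EMAIL.finditer(text)]
    for m in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", m.group(0))
        if luhn_valid(digits):
            findings.append(Finding("CARD", m.start(), m.end()))
    return sorted(findings, key=lambda f: f.start)
```

For example, in `"Card 4111 1111 1111 1111 on file; ref 4111111111111112."` only the first number is reported, because the second fails the checksum.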

Measure

| Subrosa Feature | Framework Reference | Control | Summary | NIST CSF Functions |
|---|---|---|---|---|
| Anonymizes data and removes personally identifiable information (PII) to protect privacy in AI content tracking | NIST AI 600-1, MS-2.2-002 | Ensures content provenance data management with documentation | Documents how content provenance data is tracked and how that data interacts with privacy and security. Considers anonymizing data to protect the privacy of human subjects, leveraging privacy output filters, and removing any PII to prevent potential harm or misuse | Identify, Protect |
| Provides privacy-enhancing techniques for AI content, including anonymization and differential privacy | NIST AI 600-1, MS-2.2-004 | Reduces risks of AI content attribution to individuals | Uses privacy-enhancing technologies to prevent AI-generated content from linking back to individuals | Identify, Protect |
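The MS-2.2-004 row mentions differential privacy. As a generic illustration of that technique, not a description of Subrosa's internals, the Laplace mechanism below releases a noisy count (for example, a hypothetical count of prompts per team that contained sensitive data) so that the reported figure reveals little about any single individual. It assumes each person changes the count by at most 1 (sensitivity 1); `epsilon` is the privacy budget, and smaller values add more noise. The function names are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Laplace mechanism: add noise with scale sensitivity / epsilon to a count."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

With `epsilon=1.0` the noise scale is 1, so a released count usually lands within a few units of the true value. The generator is passed in explicitly so results are reproducible in tests; a production system would more likely rely on a vetted differential privacy library and track the cumulative privacy budget across releases.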

Manage

| Subrosa Feature | Framework Reference | Control | Summary | NIST CSF Functions |
|---|---|---|---|---|
| Provides responsible synthetic data use to prevent outbound data privacy violations | NIST AI 600-1, MG-2.2-009 | Uses privacy-enhancing techniques and synthetic data that mirrors real-world statistical properties without revealing PII | Reduces exposure to personal data while maintaining dataset diversity | Protect, Detect |
| Monitors post-deployment risks by tracking outgoing data to AI models and identifying cyber threats and confabulation risks | NIST AI 600-1, MG-4.1-002 | Continuous monitoring processes for AI systems post-deployment | Establishes, maintains, and evaluates the effectiveness of organizational processes and procedures for post-deployment monitoring of GAI systems, particularly for potential confabulation, chemical, biological, radiological, or nuclear (CBRN), or cyber risks | Detect, Respond |
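The MG-4.1-002 row describes post-deployment monitoring of outgoing data, and the alignment table notes tracking at the user and device levels. A minimal sketch of that idea is a sliding-window counter keyed by (user, device) that raises an alert when too many outbound events flagged as sensitive occur within a time window. The class name, threshold, and window are hypothetical; the sketch assumes events arrive in timestamp order and that an upstream detector has already set the `sensitive` flag.

```python
from collections import defaultdict, deque


class OutboundMonitor:
    """Alert when a (user, device) pair sends too many sensitive events within a time window."""

    def __init__(self, window_seconds: float, threshold: int) -> None:
        self.window = window_seconds
        self.threshold = threshold
        # Timestamps of recent sensitive events, keyed by (user, device)
        self._events = defaultdict(deque)

    def record(self, user: str, device: str, timestamp: float, sensitive: bool) -> bool:
        """Record one outbound event; return True if it triggers an alert."""
        recent = self._events[(user, device)]
        if sensitive:
            recent.append(timestamp)
        # Drop sensitive events that have fallen out of the window
        while recent and timestamp - recent[0] > self.window:
            recent.popleft()
        return sensitive and len(recent) >= self.threshold
```

Keying by both user and device lets an alert point to a specific endpoint, which can then feed an automated incident response workflow.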

Footnotes

1. CIO.com, 2024. https://www.cio.com/article/648969/shadow-ai-will-be-much-worse-than-shadow-it.html?ref=hackernoon.com&=1
2. Splunk, State of Security 2024: The Race to Harness AI. https://www.splunk.com/en_us/campaigns/state-of-security.html